The parameters of LCD monitors are a regular topic in our articles and on every hardware resource that covers monitors. Discussions of them can be divided into three levels.

The basic level is, “Does the manufacturer try to fool us?” This question has a trivial answer: serious monitor manufacturers don’t stoop to outright lies.

The second level is more complicated: “What do the declared parameters mean, anyway?” This boils down to how the manufacturers measure the parameters and what practical limits there are on applying the measurement results. For example, the ISO 13406-2 standard defines response time as the total time it takes an LCD matrix to switch from black to white and back to black. Tests show that on every matrix type this is the fastest transition, whereas a transition between two tones of gray may take much longer, so the matrix won’t look as fast as its specs suggest. This example doesn’t belong to the first level of discussion because the manufacturer can’t be said to lie to us: if you select the highest contrast setting and measure the “black-white-black” transition, the result will match the specified response time.

But there is an even more interesting level of discussion. It’s about how our eyes perceive this or that parameter. Putting monitors aside for a while (to return to them below), I can give you an example from the acoustics field. From a purely technical point of view, vacuum-tube amplifiers have rather mediocre parameters (like a high level of harmonics, poor pulse characteristics, etc), so they don’t reproduce sound accurately. However, many listeners are fond of the sound of tube-based equipment. Not because it is objectively better than that of transistor-based equipment (as I’ve just said, it is not true), but because the distortions it brings about are agreeable to the ear.

Of course, the peculiarities of perception only come into view when the parameters of discussed devices are good enough for such peculiarities to matter. You can take $10 multimedia speakers and they won’t sound any better whatever amplifier you connect them to just because their own distortions are grosser than the flaws of any amplifier. The same goes for PC monitors. When the matrix response time amounted to dozens of milliseconds, there was no point in discussing how the human eye perceives the onscreen image. But now that the response time has shrunk to a few milliseconds, it turns out that the monitor’s speed – not its specified speed, but its subjective speed as it is perceived by the eye – is not all about milliseconds.

In this article I will cover some specified parameters of monitors (how they are measured by the manufacturers, how relevant they are for practical uses, etc) and some issues pertaining to the peculiarities of the human vision (this mainly refers to the response time parameter).

Response Time of Monitors and Eyes

Old reviews of LCD monitors often predicted that once the response time was lowered to 2-4 milliseconds, we would forget about it altogether, because a further decrease wouldn’t improve anything: we wouldn’t see the fuzziness anyway. (This refers to the real response time, not the specified value, which is not indicative of the real speed if measured according to ISO 13406-2.)

And finally such monitors are here. The latest models of gaming monitors on TN matrixes with response time compensation (RTC) technology have an average (GtG) response time of only a few milliseconds. I will put such things as RTC artifacts and inherent drawbacks of TN technology aside for now. What’s important is that the mentioned numbers are indeed achieved. But if such a monitor is put next to an ordinary CRT monitor, many people will say the CRT is still faster.

Strangely enough, it doesn’t mean we have to wait for LCD monitors with a response time of 1 millisecond, 0.5 milliseconds or less. Well, you can wait for them, of course, but such panels won’t solve the problem. Moreover, they won’t differ much from today’s 2-4ms models because the problem lies not in the panel but in the peculiarities of human vision.

Everyone knows about persistence of vision. Look at a bright object for a couple of seconds, then close your eyes, and you will see a slowly fading “imprint” of that object for a few more seconds. The “imprint” is rather vague, just the object’s contour, but it lasts for seconds! As a matter of fact, the retina retains a precise image of an object for 10-20 milliseconds after the object itself has disappeared. Only after that time does the image fade out quickly, leaving just a contour if the object was bright.

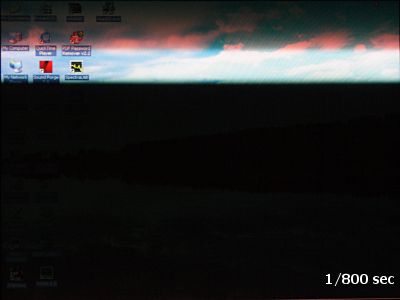

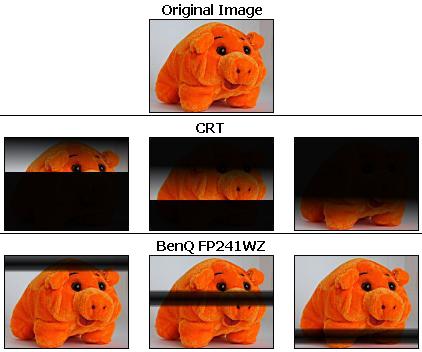

This effect comes in handy for CRT monitors. It’s thanks to persistence of vision that we don’t notice the flickering of the screen. Phosphors in today’s cathode-ray tubes have an afterglow time of about 1 millisecond. The electron beam sweeps the whole screen in 10 milliseconds (at a scan rate of 100Hz). So, if our eyes had no persistence, we’d see a light band, only one tenth of the screen in height, running from top to bottom. This can be easily demonstrated by photographing a CRT monitor at different exposure times.

At an exposure time of 1/50 of a second (20 milliseconds) we can see an ordinary image that occupies the entire screen.

At an exposure time of 1/200 of a second (5 milliseconds) a wide dark band appears in the image. The beam has only passed through half the screen during that time (at a scan rate of 100Hz) and the phosphors have already gone out in the other half of the screen.

And finally, at an exposure time of 1/800 of a second (1.25 milliseconds) we see a narrow bright band running across the screen. This band leaves a small and quickly fading trail, whereas most of the screen is black. The width of the bright band is determined by the phosphor afterglow period.
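The three photographs can be approximated with a few lines of arithmetic. The sketch below is only a simplified model: it assumes the beam sweeps the screen at a constant speed and that each point glows for exactly 1 millisecond and then goes dark at once, which real phosphors do not do. The function name `lit_fraction` is made up for this illustration.

```python
def lit_fraction(exposure_ms, refresh_hz=100.0, afterglow_ms=1.0):
    """Fraction of a CRT screen that appears lit in a photograph.

    Simplified model (an assumption, not a measurement): the beam sweeps
    the screen top to bottom once per refresh period, and each point glows
    for exactly `afterglow_ms` after the beam has passed.
    """
    period_ms = 1000.0 / refresh_hz
    # The lit band covers what the beam swept during the exposure,
    # plus the trail that was still glowing when the shutter opened.
    return min(1.0, (exposure_ms + afterglow_ms) / period_ms)


for exposure_ms in (20.0, 5.0, 1.25):
    share = lit_fraction(exposure_ms)
    print(f"{exposure_ms:5.2f} ms exposure: {share:.1%} of the screen lit")
```

Under these assumptions the 20-millisecond exposure captures the whole screen, the 5-millisecond one a little over half of it, and the 1.25-millisecond one only a narrow band.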

On one hand, this behavior of phosphors makes us set high refresh rates on CRT monitors (at least 85Hz for today’s tubes). But on the other hand, this relatively low afterglow time of phosphors makes CRT monitors faster than even the fastest of LCD monitors.

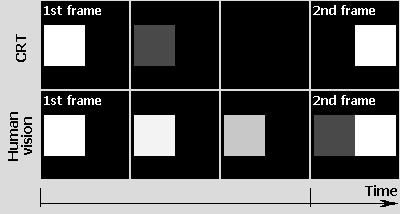

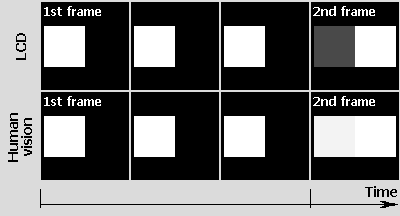

Let’s take a simple case of a white square moving across a black screen like in one of the tests of the popular TFTTest program. Consider two sequential frames in which the square moves from left to right by one position:

I tried to picture four sequential “snaps”: the first and last of them are the moments the monitor displays the two sequential frames while the other two snaps illustrate the behavior of the monitor and our eyes in between the displayed frames.

In the case of a CRT monitor, the square is displayed in the first frame, but begins to fade out rapidly after 1 millisecond (the phosphor afterglow time), so it has vanished from the screen completely before the second frame arrives. But thanks to persistence of our vision we continue to see this square for about 10 milliseconds more so that it only begins to fade out noticeably by the arrival of the second frame. The moment the second frame is being drawn by the monitor, our brain is receiving two images at once: a white square in the new position and a rapidly fading image of it (left on the eye retina) in the old position.

Active-matrix LCD monitors do not flicker, as opposed to CRT monitors. They store the image through all the period between the frames. On one hand, this solves the refresh rate problem (the screen does not flicker at any frequency), but on the other hand – just take a look at the illustration above. The image was rapidly fading out on the CRT monitor between the two frames, but it remains unchanged on the LCD panel! When the second frame arrives, the monitor displays the white square in the new position and the old frame takes about 1-2 milliseconds to fade out (that’s the typical pixel fall time of modern fast TN matrixes – comparable to the phosphor afterglow time of CRT monitors). But the retina of the eye is going to retain the old image for 10 milliseconds after the real image has disappeared! And through all this time the “imprint” is combined with the new image. As a result, the brain is receiving two images at once for about 10 milliseconds after the second frame has arrived: the real picture of the second frame from the monitor’s screen plus the imprint of the first frame on the retina. That’s not unlike the well-known fuzziness effect, but the old image is now stored not in the slow monitor matrix, but in the slow retina of your own eye!

To cut it short, when the LCD matrix’s own response time is below 10 milliseconds, reducing it further brings a smaller effect than might be expected because the persistence of human vision comes into play as an important factor. Moreover, even if the LCD monitor’s response time is reduced to tiny values, it will still look subjectively slower than a CRT. The difference lies in the moment from which the retention of the after-image on the retina is counted: with CRT monitors, it is the time the first frame arrives plus 1 millisecond; with LCD monitors, it is the time the second frame arrives. Thus, the difference between the two types of monitors amounts to about 10 milliseconds.
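The timing argument can be written down as a toy calculation. This is a rough sketch, not a model of real vision: it assumes the retinal imprint lasts exactly 10 milliseconds and then vanishes, that both monitors run at 60Hz, and that the LCD pixel fall time is 1.5 milliseconds. The function name and all of these numbers are illustrative assumptions.

```python
def ghost_overlap_ms(image_off_ms, next_frame_ms, persistence_ms=10.0):
    """How long the old imprint overlaps the new frame, in milliseconds.

    image_off_ms   -- when the old image leaves the screen (t=0 is frame 1)
    next_frame_ms  -- when the second frame appears
    persistence_ms -- assumed lifetime of the imprint on the retina
    """
    imprint_ends_ms = image_off_ms + persistence_ms
    return max(0.0, imprint_ends_ms - next_frame_ms)


FRAME_PERIOD_MS = 1000.0 / 60.0  # assumed 60Hz for both monitors

# CRT: the phosphor goes dark about 1 ms after the frame is drawn.
crt = ghost_overlap_ms(image_off_ms=1.0, next_frame_ms=FRAME_PERIOD_MS)

# LCD: the old image stays until the second frame, then needs ~1.5 ms to fall.
lcd = ghost_overlap_ms(image_off_ms=FRAME_PERIOD_MS + 1.5,
                       next_frame_ms=FRAME_PERIOD_MS)

print(f"CRT overlap: {crt:.1f} ms, LCD overlap: {lcd:.1f} ms")
```

With a hard cutoff the CRT imprint is gone before the second frame arrives, while on the LCD it overlaps the new frame for the whole persistence time plus the pixel fall time.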

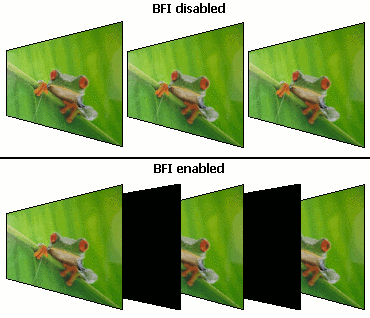

The way to solve this problem seems obvious. If the CRT screen looks fast due to its being black for most time between two sequential frames, which allows the after-image to begin to fade out on the eye retina just by the moment the new frame arrives, we should deliberately insert additional black frames into the image frames on LCD monitors.

BenQ has already implemented this idea in its Black Frame Insertion (BFI) technology. A BFI-enabled monitor is supposed to insert additional black frames into the output image, thus emulating the operation of an ordinary CRT.

At first, the extra frames were supposed to be inserted by changing the image on the matrix rather than by turning off the backlight. This would work on fast TN matrixes, but not on MVA and PVA matrixes, whose transitions into black and back again are too slow. Such transitions take mere milliseconds on today’s TN matrixes, but as long as 10 milliseconds on even the best monitors on *VA matrixes. Thus, inserting a black frame would take longer on them than the repetition period of the main image frames, and BFI technology wouldn’t work. Moreover, it’s not even the frame repetition period (16.7 milliseconds at 60Hz, which is the standard refresh rate of LCD monitors) but our eyes that limit the maximum duration of the black frame. If the black frames last too long, the monitor will flicker just as a CRT monitor with a scan rate of 60Hz does. I don’t think anyone would like that.

By the way, it’s not quite correct to say that BFI doubles the frame rate, as some reviewers claim. The matrix’s own frequency rises in proportion to the number of added black frames, but the image frame rate remains the same. And from the graphics card’s point of view, nothing changes at all.

So when BenQ introduced its FP241WZ monitor on a 24” PVA matrix, it turned out to use a different technology that serves the same purpose: the black frame is inserted not with the matrix, but with the backlight lamps. The lamps just go out for a while at the necessary moment.

This implementation of BFI is not affected by the matrix’s response time, so it can be successfully employed in TN as well as any other matrixes. Talking about the FP241WZ, it has 16 horizontal independently-controlled backlight lamps behind the matrix. As opposed to CRT monitors with their bright band of scanning running through the screen as you’ve seen above in the photographs with a low exposure value, BFI uses a dark band. At each given moment, 15 out of the 16 lamps are alight, and one is turned off. Thus, a narrow dark band runs across the screen of the FP241WZ during each frame:

Why did they choose this implementation rather than lighting up only one lamp (this would emulate a CRT monitor exactly) or turning off and lighting up all the lamps at once? Modern LCD monitors work at a refresh rate of 60Hz, so an attempt to accurately copy a CRT would produce a badly flickering picture. The narrow dark band is synchronized with the monitor’s refresh rate (the matrix above each lamp shows the previous frame the moment before the lamp is turned off, but when this lamp is lit up again, the matrix already shows the new frame) and this partially makes up for the above-described persistence-of-vision effect, but without a noticeable flicker.

This modulation of the backlight lamps worsens the monitor’s max brightness somewhat, but that’s not a problem as modern LCD monitors have a good reserve of brightness (it may be as high as 400 candelas per sq. m in some models).
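The cost in brightness is easy to estimate. The sketch below assumes that perceived brightness is simply proportional to the share of time each lamp is lit and that every lamp is dark for an equal slice of the frame; the function names are hypothetical and the 400 cd/sq. m figure is just the example value from the text.

```python
def average_brightness(peak_cd_m2, lamps_total=16, lamps_off=1):
    """Time-averaged brightness with a scanning dark band.

    Assumes brightness scales linearly with the fraction of lamps lit.
    """
    return peak_cd_m2 * (lamps_total - lamps_off) / lamps_total


def dark_time_per_frame_ms(refresh_hz=60.0, lamps_total=16, lamps_off=1):
    """How long each lamp stays dark during one frame period."""
    frame_period_ms = 1000.0 / refresh_hz
    return frame_period_ms * lamps_off / lamps_total


print(average_brightness(400.0))           # brightness left with 15 of 16 lamps lit
print(round(dark_time_per_frame_ms(), 2))  # dark slice per lamp, in milliseconds
```

With one lamp out of 16 switched off, only 1/16 of the light is lost, and each area of the screen is dark for roughly one millisecond per frame.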

We haven’t yet tested the FP241WZ in our labs, so I can only quote a review published by the respectable BeHardware (“BenQ FP241WZ: 1st LCD with screening”). Vincent Alzieu writes there that the new technology indeed improves the subjective perception of the monitor’s response time, but although only one out of the 16 backlight lamps is off at any given moment, a flickering of the screen can be noticed in some cases, particularly on large solid-color fields.

I guess this is due to an insufficiently high refresh rate. As I wrote above, the switching of the backlight lamps is synchronized with it, so the full cycle takes as long as 16.7 milliseconds (at 60Hz). The human eye’s sensitivity to flicker depends on many conditions (for example, it’s hard to see the 100Hz flicker of an ordinary fluorescent lamp with an electromagnetic ballast if you are looking straight at it, but much easier if it is in your peripheral vision), so it may be supposed that the monitor’s vertical refresh rate is still too low, even though the use of 16 backlight lamps brings an overall positive effect. As we all know from CRT monitors, if the whole screen were flickering at 60Hz, you wouldn’t need to look for the flicker specially, and working at such a monitor would be torture.

An easy way out of this trouble is to move LCD monitors to a refresh rate of 75 or even 85Hz. You may argue that many monitors already support 75Hz, but I have to disappoint you: in most cases this support exists only on paper. The monitor receives 75 frames per second from the PC, but discards every fifth frame to display 60 frames per second on the matrix. This can be proved by photographing a rapidly moving object with a long enough exposure time (about 1/5 of a second, so that the camera catches a dozen of the monitor’s frames). On many monitors the photograph shows a uniform movement of the object at a refresh rate of 60Hz, but at 75Hz there are gaps in the movement. Subjectively this is perceived as a lack of smoothness.
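The gaps are easy to reproduce on paper. The following sketch assumes the monitor drops exactly every fifth incoming frame, as described above, and that the object moves one position per source frame; the function names are invented for the illustration and do not describe any real monitor firmware.

```python
def displayed_frames(input_frames, drop_every=5):
    """Keep all frames except every `drop_every`-th one (75 in, 60 out)."""
    return [frame for index, frame in enumerate(input_frames, start=1)
            if index % drop_every != 0]


def position_steps(positions):
    """Distance the object jumps between consecutive displayed frames."""
    return [later - earlier for earlier, later in zip(positions, positions[1:])]


# One second of video at 75 fps; the object moves one position per frame.
source = list(range(75))
shown = displayed_frames(source)
steps = position_steps(shown)

print(len(shown))   # how many frames reach the matrix
print(steps[:10])   # mostly single steps, with a double step at each dropped frame
```

Every dropped frame shows up as a double-length jump in an otherwise uniform sequence, which is what the long-exposure photograph reveals as a gap.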

Besides this obstacle, which is quite surmountable now that the monitor manufacturers have got to deal with it, there is one more: the higher refresh rate calls for a bigger bandwidth of the monitor interface. To be specific, monitors with native resolutions of 1600×1200 and 1680×1050 pixels will have to use Dual Link DVI to transition to a refresh rate of 75Hz since the operating frequency of Single Link DVI (165MHz) won’t be enough. This is not a crucial problem, yet it may impose certain restrictions on compatibility of monitors with graphics cards, especially older graphics cards.
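A rough bandwidth check illustrates the point. The required pixel clock is the total number of pixels per frame, including blanking intervals, multiplied by the refresh rate. The total frame sizes used below (2160×1250 for 1600×1200 and 2240×1089 for 1680×1050) are assumptions based on conventional full-blanking timings; timings with reduced blanking need a lower clock, so the exact margin depends on the timing a given monitor actually uses.

```python
SINGLE_LINK_DVI_MHZ = 165.0  # Single Link DVI limit quoted in the text


def pixel_clock_mhz(h_total, v_total, refresh_hz):
    """Pixel clock for a mode, given total (active + blanking) frame size."""
    return h_total * v_total * refresh_hz / 1e6


def needs_dual_link(h_total, v_total, refresh_hz):
    """True if the mode exceeds the Single Link DVI pixel clock."""
    return pixel_clock_mhz(h_total, v_total, refresh_hz) > SINGLE_LINK_DVI_MHZ


# Assumed totals with conventional blanking (illustrative, not authoritative).
ASSUMED_TOTALS = {
    "1600x1200": (2160, 1250),
    "1680x1050": (2240, 1089),
}

for name, (h_total, v_total) in ASSUMED_TOTALS.items():
    for refresh_hz in (60, 75):
        clock = pixel_clock_mhz(h_total, v_total, refresh_hz)
        link = "Dual Link" if needs_dual_link(h_total, v_total, refresh_hz) else "Single Link"
        print(f"{name} @ {refresh_hz}Hz: {clock:.1f} MHz -> {link}")
```

With these assumed timings both resolutions fit into a single link at 60Hz and exceed it at 75Hz.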

Interestingly, an increase in the refresh rate reduces the fuzziness effect by itself, with the panel’s specified response time staying the same. This is again related to persistence of vision. Suppose that a picture moves by 1 centimeter on the screen during one frame period at a refresh rate of 60Hz (16.7 milliseconds). The next frame arrives and the retina sees the new picture plus the remaining imprint of the old picture with a shift of 1 centimeter between them. Now if the frame rate is doubled, the eye will register frames at an interval of about 8.3 milliseconds rather than 16.7 milliseconds. It means the shift between the old and new images is half as large. From the eye’s point of view, the trail behind a moving object becomes half as long, too. Thus, a very high frame rate would give us the same picture as we see in real life, i.e. without any fuzziness.
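The relation is a simple proportion: the shift between the old imprint and the new frame equals the object’s speed multiplied by the frame period. A minimal sketch, assuming every displayed frame is unique and the object moves at a constant speed (the function name is made up for this example):

```python
def trail_length_cm(speed_cm_per_s, refresh_hz):
    """Shift between two consecutive frames of a uniformly moving object."""
    return speed_cm_per_s / refresh_hz


# 1 cm per frame at 60Hz corresponds to 60 cm per second.
speed = 60.0
for refresh_hz in (60.0, 120.0, 240.0):
    print(f"{refresh_hz:.0f}Hz: {trail_length_cm(speed, refresh_hz):.2f} cm")
```

Each doubling of the frame rate halves the shift, which is why the trail keeps shrinking as the rate goes up.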

It should be made clear, however, that a simple increase of the monitor’s refresh rate, as they did with CRT monitors to avoid the flicker, is not enough. It is necessary that all the frames are unique. Otherwise, the frame rate increase is useless.

This would produce a funny side effect in games. In most new 3D games a speed of 60fps is considered good even on up-to-date graphics cards, and an increase in the refresh rate of your LCD monitor won’t affect the fuzziness until you install a graphics card powerful enough to run the game at a speed matching the monitor’s refresh rate. In other words, once LCD monitors with a real refresh rate of 85 or 100Hz appear, the image fuzziness in games will depend on the graphics card, although we’ve all got used to regarding fuzziness as depending only on the monitor’s properties.

It’s more complex with movies. Whatever graphics card you install, there is still 25fps, or 30fps at most, in movies. Thus, an increase in the monitor’s refresh rate won’t affect the fuzziness effect in movies. There is a solution: when reproducing a movie, additional frames can be created that would be the average of two sequential real frames. These frames are then inserted into the video stream. This approach will help reduce fuzziness even on existing monitors since their actual refresh rate of 60Hz is two times the frame rate of movies.
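The averaging idea from this paragraph can be sketched in a few lines. This is only a naive illustration that treats a frame as a grid of grayscale values and blends neighbouring frames pixel by pixel; it makes no claim about how any real player or TV-set builds its intermediate frames, and the function names are hypothetical.

```python
def blend(frame_a, frame_b):
    """Pixel-by-pixel average of two equally sized grayscale frames."""
    return [[(a + b) // 2 for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(frame_a, frame_b)]


def insert_blended_frames(frames):
    """Insert an averaged frame between every pair of neighbouring frames."""
    if not frames:
        return []
    result = [frames[0]]
    for previous, current in zip(frames, frames[1:]):
        result.append(blend(previous, current))
        result.append(current)
    return result


# A white pixel moving one position to the right on a one-row "screen".
frame_1 = [[255, 0, 0, 0]]
frame_2 = [[0, 255, 0, 0]]
doubled = insert_blended_frames([frame_1, frame_2])
print(doubled)
```

Two source frames become three, and the inserted frame contains a half-bright copy of the object in both positions. More elaborate schemes would estimate the motion instead of averaging, but that goes beyond this sketch.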

This is already implemented in the 100Hz LE4073BD TV-set from Samsung. It is equipped with a DSP that automatically creates intermediate frames and inserts them into the video stream in between the main frames. On one hand, the LE4073BD indeed exhibits much lower fuzziness than TV-sets without such a feature, but on the other hand the new technology produces a surprising side effect: the picture looks like a soap opera with its unnaturally smooth movements. Some may like it, but most people would prefer the minor fuzziness of an ordinary monitor to the new “soapy” effect, especially as the fuzziness of today’s LCD monitors in movies is already barely perceptible.

Besides all these problems, there will be purely technical issues. Thus, an increase of the refresh rate above 60Hz will call for the use of Dual Link DVI even in monitors with a resolution of 1680×1050 pixels.

Summarizing this section, I want to emphasize three main points:

- A) For LCD monitors with a real response time of less than 10 milliseconds, the further reduction of the response time brings a smaller effect than expected because the persistence of human vision becomes a factor to be accounted for. In CRT monitors the black period between the frames gives the retina enough time to “forget” the previous frame, but there is no such period in classic LCD monitors – the frames are following each other continuously. Thus, the further attempts of the manufacturers to increase the speed of LCD monitors will be about how to deal with the persistence of vision effect rather than about how to lower the specified response more. By the way, this problem concerns not only LCD monitors but also any other active-matrix technology in which the pixel is alight continuously.

- B) The most promising technology at the moment is the short-term turning off of the backlight lamps as is implemented in the BenQ FP241WZ. It is rather simple to implement (the only downside is the necessity to use a large number and a certain configuration of backlight lamps, but it’s a solvable problem for monitors with long screen diagonals). It is suitable for any type of the matrix and doesn’t have serious flaws. Perhaps the refresh rate of the new monitors will have to be increased to 75-85Hz, but the manufacturers may solve the problem of flickering (noticeable on the above-mentioned FP241WZ model) in other ways, so we should wait for other models with backlight blanking to appear.

- C) Generally speaking, today’s monitors (on any matrix type) are fast enough for a majority of users even without the mentioned technologies. So you should only wait for the arrival of models with backlight blanking if you are absolutely unsatisfied with the existing monitors.

Input Lag

The problem of a lag in displaying frames on some monitor models has been widely discussed on Web forums recently and seems to resemble the response time issue. However, it is quite a different effect. With ordinary fuzziness, the monitor starts displaying a frame the moment it receives it, but it takes some time for the frame to be drawn fully. With the input lag effect, some time, a multiple of the monitor’s refresh period, passes between the arrival of the frame from the graphics card and the moment it begins to be displayed. In other words, the monitor is equipped with a frame buffer (ordinary memory) that stores one or several frames. Each frame arriving from the graphics card is first written into the buffer and only then displayed on the screen.

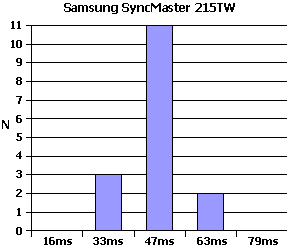

It’s simple to measure this lag. Connect two monitors (a CRT and an LCD one, or two different LCD monitors) to two outputs of the same graphics card in Clone mode and launch a timer that counts milliseconds. Then take a series of photographs of the monitors’ screens. If one monitor has an input lag, the timer readings in the photographs will differ by the value of the lag: one monitor displays the current timer reading, and the other shows a value that was current a few frames earlier. To get reliable results, you should take a score of photographs and discard those that obviously fell at the moments the frames changed. The diagram below shows the results of such measurements for a Samsung SyncMaster 215TW monitor (in comparison with an LCD monitor that has no input lag). The X-axis shows the difference between the timer readings on the two screens. The Y-axis shows the number of photographs with the given difference.

A total of 20 photographs were taken, and 4 of them obviously fell at the moments the frames changed (they showed two values on the timers, one from the older frame and one from the new frame). Of the remaining 16, two photographs gave a difference of 63 milliseconds, three gave 33 milliseconds, and 11 gave 47 milliseconds. Thus, the correct lag value for the 215TW is 47 milliseconds, or about 3 frames.
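The same bookkeeping can be done in code. The sketch below takes the 16 usable readings quoted above, picks the most frequent difference and converts it into frame periods, assuming a 60Hz refresh rate; the function names are illustrative only.

```python
from collections import Counter


def estimate_lag_ms(differences_ms):
    """Most frequent timer difference among the usable photographs."""
    value, _count = Counter(differences_ms).most_common(1)[0]
    return value


def lag_in_frames(lag_ms, refresh_hz=60.0):
    """Express a lag in units of the monitor's frame period."""
    return lag_ms * refresh_hz / 1000.0


# Readings from the 16 photographs that did not catch a frame change.
readings = [63] * 2 + [33] * 3 + [47] * 11

lag = estimate_lag_ms(readings)
print(lag, round(lag_in_frames(lag), 2))
```

Taking the most frequent value rather than the average keeps the occasional 33 and 63 millisecond snapshots from skewing the result.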

As a kind of digression, I want to say that I’m rather skeptical about forum posts whose authors claim an abnormally low or high input lag on their particular sample of a monitor. They just don’t accumulate enough statistics and take only one photograph. As you have seen above, individual snapshots may catch a lag either higher or lower than the real value. The longer the camera exposure time, the higher the probability of such a mistake. To obtain real data, you need to take a couple of dozen snapshots and then choose the most frequent lag value.

Well, this is all irrelevant for our shopping needs. We won’t go photographing timers on monitors we want to buy, will we? From a practical standpoint, the question is whether this lag is worth worrying about at all. Let’s take the above-mentioned SyncMaster 215TW with its input lag of 47 milliseconds. I don’t know of monitors with a higher input lag, so this example makes sense.

47 milliseconds is a very small time period in terms of human reaction speed. It is comparable to the time it takes the signal to travel from the brain to the muscles via nerve fibers. There’s a term in medicine that sounds like “the time of a simple sensorimotor response”. It is the time between an occurrence of some event the brain can easily process (for example, the lighting of a bulb) and a muscle reaction (a press on a button). For humans, this time is 200-250 milliseconds on average. During this time the eye registers the event and transfers information about it into the brain, the brain then identifies the event and sends an appropriate command to the muscles. A lag of 47 milliseconds doesn’t seem big in comparison with this number.

It’s impossible to notice this lag in everyday office work. You can try to catch the difference between the movements of the mouse on the desk and the movements of its pointer on the screen, but the time the brain takes to process these events and relate them to each other is so long that 47 milliseconds prove to be an insignificant value. Note also that watching the movements of the pointer is a much more complex task than watching for a bulb to light up as in the simple sensorimotor response test, so the reaction time is going to be longer.

However, many users report that they feel the mouse pointer moves slowly on their new monitor. They also find it difficult to hit small buttons and icons at first attempt as they always used to do. And they put the blame on the input lag that was missing in the older monitor and is present in the new one.

But when you come to think of it, many people are now transitioning to new large models from either 19” models with 1280×1024 resolution or from CRT monitors. Let’s take a transition from a 19” LCD to the above-mentioned 215TW: the horizontal resolution has increased by about a third (from 1280 to 1680 pixels), which means you have to move your mouse farther to get its pointer from one edge of the screen to the other, if the mouse settings have remained the same. That’s where the feeling of a “slow pointer” comes from. You can get the same feeling on your current monitor by lowering the pointer speed by about a quarter in the mouse driver’s settings.
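The resolution arithmetic is worth spelling out. The sketch below assumes the pointer moves a fixed number of pixels per unit of hand movement, so the hand travel needed to cross the screen scales with the horizontal resolution; both function names are invented for the example.

```python
def travel_ratio(old_width_px, new_width_px):
    """How much farther the hand must move to cross the new screen."""
    return new_width_px / old_width_px


def equivalent_speed_factor(old_width_px, new_width_px):
    """Pointer speed multiplier that mimics the new screen on the old one."""
    return old_width_px / new_width_px


print(travel_ratio(1280, 1680))                       # extra hand travel on the new screen
print(round(equivalent_speed_factor(1280, 1680), 2))  # speed setting that feels the same
```

Going from 1280 to 1680 pixels means about 31% more hand travel with unchanged settings, which corresponds to a pointer speed of roughly three quarters of the old value.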

It’s the same with the failure to hit the buttons on the new monitor. Our nervous system is too slow to catch the pointer-above-the-button moment with the eyes and transfer a nervous impulse to the finger that presses on the mouse’s left button before the pointer leaves the button. So, the accuracy of hitting the buttons is in fact reduced to a repetition of learned movements when the brain knows beforehand what movement of the hand corresponds to a specific movement of the pointer and with what delay the command to the finger should be sent so that the pointer was exactly above the necessary button when the finger presses the mouse. Of course, all these learned actions become useless when the resolution and the physical size of the screen change. The brain has to adjust itself to the new conditions and you’ll be indeed missing the onscreen buttons for a while until this adjustment is accomplished. But this is in no way related to the monitor’s input lag. Just like in the previous experiment, you can achieve the same effect by changing the sensitivity of your mouse. If you increase it, you’ll be racing past the necessary button. And if you decrease it, you’ll be stopping your pointer before reaching it. But the brain will adapt after a while, and you’ll again be hitting the buttons with ease.

So, when you replace your monitor with one that has a significantly different resolution or screen size, just open the mouse settings panel and experiment a little with its sensitivity. If you’ve got an old mouse with a low optical resolution, you may want to purchase a newer and more sensitive one. It is going to move smoothly even if you select a high speed in its settings. An extra $20 for a good mouse is a tiny sum in comparison with the price of a new monitor.

So, it’s clear with office work, let’s now consider movies. Theoretically, there may be a problem due to non-synchronization of audio (which goes without any delays) and video (which is delayed by 47 milliseconds by the monitor). But as you can easily check out in any video editor, you can only spot non-synchronization in movies when there is a difference of 200-300 milliseconds between video and audio. This is many times more than the monitor’s delay. 47 milliseconds is just a little over the period of one movie frame (this period is 40 milliseconds at a frame rate of 25fps). It’s impossible to catch such a small difference between video and audio.

Thus, it is only in games that the monitor’s input lag may be an important factor, yet I think that most people who are discussing this problem at forums are inclined to overstate it. Those 47 milliseconds won’t make a difference for most people in most games, except for a situation when you and your enemy spot each other at the same moment in a multiplayer game. In this case the reaction speed is crucial and the additional 47ms delay may be significant. But if you are anyway half a second slower in spotting your opponent, the milliseconds won’t save you.

The monitor’s input lag does not affect the aiming accuracy in first-person shooters or the steering accuracy in racing sims because it is again learned movements that you repeat: our nervous system isn’t fast enough to press the Fire button the moment the sight catches the enemy, but it can easily adapt, for example, to the necessity of sending the finger a Press command before the sight is at the enemy. So, any additional short-time lags just make the brain adjust itself once again for the new conditions. If a user who’s got used to a monitor with an input lag begins to use a monitor without a lag, he will have to adjust himself, too, and the new monitor will feel suspiciously inconvenient to him at first.

I’ve also read forum posts saying that it was just impossible to play games on a new monitor due to the input lag, but this had a very simple explanation. The user had changed the resolution from 1280×1024 to 1680×1050 but hadn’t thought that his old graphics card wouldn’t be as fast in the higher resolution. So, be careful when reading Web forums! You can’t know the level of technical competence of people who are posting on them and you can’t say beforehand if things that seem obvious to you are as obvious to them.

The input lag problem is also aggravated by two traits common to all people. First, many people are inclined to search for complex explanations of simple things. They prefer to think that a light dot in the sky is a “flying saucer” rather than an ordinary weather balloon, or that the strange shadows in the NASA photographs of the Moon prove that men have never landed there rather than reflect the unevenness of the moonscape. Anyone who has ever taken an interest in the activities of UFO researchers and other folks of that kind will tell you that most of their alleged discoveries are the result of devising excessively complex theories instead of just looking for simple, earthly explanations of phenomena.

A comparison between UFO researchers and PC users who are purchasing a monitor may seem strange, yet the latter are prone to behave in the same manner at a Web forum. Most of them won’t even consider the fact that the feeling from a monitor will totally change if its resolution and size are different. Instead, they are earnestly discussing how a negligible lag of 47 milliseconds may affect the mouse pointer’s movement.

And second, people are prone to auto-suggestion. Take two bottles of beer of different brands, one cheap and another expensive, and pour the same brand of beer into them. Most people will say the beer from the bottle with the expensive brand label tastes better, but if the labels are removed – the opinions will divide equally. The problem is our brain cannot fully disengage itself from various external factors. So when we see a luxurious packaging, we subconsciously begin to expect a higher quality of the contents of such a package and vice versa. To avoid this, all serious subjective comparisons are performed with a blind test method. All the tested samples go under different numbers and none of the testing experts knows which number corresponds to which brand.

It’s the same with the input lag thing. A person who has just bought or is going to buy a new monitor goes to a monitor-related forum and finds multiple-page discussions of such horrors of the input lag as slow movements of the mouse, total non-playability, etc. And there surely are a few people there who claim they can see the lag with their own eyes. Having digested all that, the person goes to a shop and begins to stare at the monitor he’s interested in, thinking, “There must be a lag because other people see it!” Of course, he soon begins to see it himself, or rather to think he’s seeing it. Then he goes back home and writes to the forum, “Yeah, I’ve checked that monitor out – it’s really retarded”. You can even read some funny posts like, “I’ve been using this monitor for two weeks, but it’s only now, after I’ve read the forum, that I see the lag”.

Some time ago there were video clips on YouTube in which a program window was dragged up and down with a mouse across two monitors standing next to each other and working in desktop extension mode. It was perfectly clear that the window on the monitor with an input lag was moving with a delay. The clips looked pretty, but think: a monitor with a refresh rate of 60Hz is shot on a camera whose sensor is refreshed at 50Hz. This is then saved into a video file with a frame rate of 25 frames per second, and the file is then uploaded to YouTube, which may well re-encode it once more without informing you. Is there much of the original left after all that? I guess not. I saved the clip from YouTube, opened it in a video editor and watched it frame by frame. And I saw that at some moments there was a much bigger difference than the mentioned 47 milliseconds between the two monitors, but at other moments the windows were moving in sync as if there was no lag at all. So I just can’t take this as convincing and coherent proof.
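To see why such a clip is a poor measuring tool, here is a rough sketch of the sampling problem. It assumes a much simplified model that is mine, not anything taken from the clips: both monitors refresh at 60Hz, the slower one shows everything exactly 47 ms later, and the recording captures whole frames at 25 per second. Re-encoding and camera exposure are ignored, and the function name is made up for the illustration.

```python
from fractions import Fraction
from math import ceil

REFRESH = Fraction(1, 60)    # monitor refresh period, seconds
FRAME = Fraction(1, 25)      # video frame period, seconds (assumed)
LAG = Fraction(47, 1000)     # true lag of the slower monitor, seconds

def observed_lag_ms(event_time):
    """Lag a viewer would read off the video, in milliseconds."""
    # The window movement appears at the next monitor refresh.
    shown_fast = ceil(event_time / REFRESH) * REFRESH
    shown_slow = shown_fast + LAG
    # Each change becomes visible only in the next recorded video frame.
    frame_fast = ceil(shown_fast / FRAME)
    frame_slow = ceil(shown_slow / FRAME)
    return int((frame_slow - frame_fast) * FRAME * 1000)

# Try mouse events at every millisecond of one second.
observed = sorted({observed_lag_ms(Fraction(i, 1000)) for i in range(1, 1000)})
print(observed)
```

Even in this idealized model the video can only report the lag in whole 40 ms frames, so the same 47 ms delay reads as one frame at some moments and two frames at others. Real clips add further distortions on top of that.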

Here’s my conclusion to this section:

- A) There is indeed an input lag on some monitors. The maximum value of the lag that I’ve seen in my tests is 47 milliseconds.

- B) A lag of this value cannot be noticed in ordinary work or in movies. It may make a difference in games for well-trained gamers, but wouldn’t matter for most other people even in games.

- C) You may feel discomfort after replacing your monitor with a model that has a larger diagonal and resolution due to the low speed or sensitivity of your mouse, the low speed of your graphics card or the different size of the screen. However, many people read too many forums and are inclined to blame the input lag for any discomfort they may feel with their new monitor.

In short, the problem does exist theoretically, but its practical effect is greatly overstated. The vast majority of people won’t ever notice a lag of 47 milliseconds, let alone smaller lags, anywhere.

Contrast Ratio: Specified, Actual and Dynamic

The fact that a good CRT monitor provides better contrast than a good LCD monitor has long been regarded as an a priori truth that doesn’t need more proof. We can all see how bright a black background seems on LCD monitors in darkness. And I don’t want to deny this fact. It’s hard to deny what you can see with your own eyes even when you are sitting at the newest S-PVA matrix with a specified contrast of 1000:1.

The specified contrast is usually measured by the LCD matrix maker rather than by the manufacturer of the monitor. It is measured on a special testbed by applying a certain signal and at a certain level of backlight brightness. The contrast ratio is the ratio of the luminance of white to the luminance of black.

It may be more complicated in an LCD monitor because the level of black may be determined not only by the matrix characteristics, but sometimes by the monitor’s own settings, particularly in models where the brightness parameter is regulated by the matrix rather than by the backlight lamps. In this case, the monitor’s contrast ratio may turn out to be lower than the matrix’s specified contrast ratio if the monitor is not set up accurately. This effect can be illustrated by Sony’s monitors, which allow you to control brightness either with the matrix or with the lamps. If you increase the matrix brightness above 50% on them, the black color soon degenerates into gray.

There is an opinion that the specified contrast ratio can be improved by means of backlight brightness, and that this is why many monitor manufacturers employ such bright lamps. But I want to tell you that this opinion is totally wrong. When the backlight brightness is increased, the levels of both white and black grow at the same rate, so their ratio, which is the contrast ratio, does not change.
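A quick numerical check makes the point. The luminance figures below are hypothetical values chosen for illustration (a panel with 300 cd/m² white and 0.3 cd/m² black at nominal backlight), and the function name is my own.

```python
def contrast_ratio(white_cd_m2, black_cd_m2):
    """Contrast ratio: luminance of white divided by luminance of black."""
    return white_cd_m2 / black_cd_m2

# Hypothetical panel at nominal backlight brightness.
base_white, base_black = 300.0, 0.3

# The backlight scales white and black by the same factor.
for backlight_scale in (0.5, 1.0, 1.5):
    white = base_white * backlight_scale
    black = base_black * backlight_scale
    print(backlight_scale, white, black, round(contrast_ratio(white, black)))
```

Whatever scale factor is used, it cancels out in the division, so the ratio stays the same while both levels move together.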

Well, all this has been said many times before, so let’s move on to other questions.

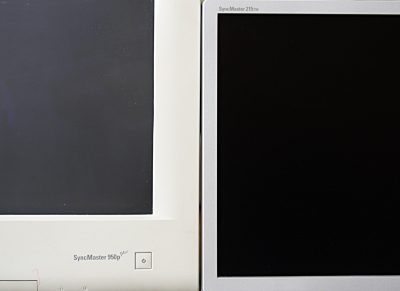

Surely, the specified contrast ratio of modern LCD monitors is still too low to match good CRT monitors: their screens are noticeably bright in darkness even if the image is all black. But we usually use our monitors not in darkness, but in daylight, sometimes very bright daylight. It’s clear that the real contrast ratio is going to differ from the specified one under such conditions because the monitor’s own light is added to the external light it reflects.

Here is a photo of two monitors standing next to each other. One is a CRT monitor, the Samsung SyncMaster 950p+, and the other is an LCD monitor, the SyncMaster 215TW. Both are turned off and the ambient lighting is normal: sunlight on a cloudy day. It can easily be seen that the screen of the CRT monitor is much brighter under these conditions than the screen of the LCD monitor. That’s exactly the opposite of what we see in darkness when the monitors are both turned on.

It’s easy to explain: the phosphor used in cathode-ray tubes is light gray by itself. To make the screen darker, a tinted film is put on the glass. The phosphor’s own light goes through that film only once, but the external light has to pass through it twice (on its way to the phosphor and then, bouncing back from it, to the outside, into the user’s eye). Thus, the intensity of the external light is weakened much more by the film.

CRT monitors can’t have an absolutely black screen. As the film becomes more opaque, the brightness of the phosphors must be increased since it is weakened by the film, too. And this brightness is limited to a rather moderate level with CRTs, because when the current of the cathode beam is increased, its focusing worsens, producing a fuzzy, unsharp image. That’s the reason why the maximum reasonable brightness of CRT monitors is not higher than 150 candelas per sq. meter.
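The single pass versus double pass argument is easy to put into numbers. The sketch below is only an illustration: the transmission value, the light levels and the function name are my assumptions, and the phosphor is treated as reflecting all the light that reaches it unless a lower reflectance is given.

```python
def through_film(phosphor_light, ambient_light, transmission, reflectance=1.0):
    """Return (emitted, reflected) light leaving a CRT screen.

    transmission: fraction of light the tinted film lets through (0..1).
    reflectance: fraction of ambient light the phosphor bounces back (assumed).
    """
    # The phosphor's own light crosses the film once.
    emitted = phosphor_light * transmission
    # Ambient light crosses the film, reflects, and crosses the film again.
    reflected = ambient_light * transmission * reflectance * transmission
    return emitted, reflected

emitted, reflected = through_film(100.0, 100.0, transmission=0.5)
print(emitted, reflected)
```

With a film that passes half of the light, the image loses half of its brightness while the reflected ambient light drops to a quarter. A darker film helps contrast in daylight, but only at the price of the image brightness that a CRT cannot spare.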

With LCD monitors, the external light has almost nothing to get reflected from. LCD matrixes don’t have any phosphors, only layers of glass, polarizers and liquid crystals. A small portion of light is reflected from the external surface of the screen, but most of it penetrates the screen and is then lost forever. That’s why the screen of a turned-off LCD monitor looks almost perfectly black in daylight.

So, a CRT monitor is much brighter than an LCD monitor in daylight when they are both turned off. If we now turn the monitors on, the LCD will get a bigger addition to the level of black than the CRT due to its lower specified contrast ratio, yet it will still remain darker than the CRT. But if we now “turn out” the daylight by drawing the blinds, we’ll get just the opposite: the CRT monitor will yield a deeper black!

Thus, a monitor’s real contrast depends on ambient lighting. The more intense the lighting, the better for LCD monitors, which provide a high-contrast picture even under bright lighting, whereas CRT monitors then show a faded picture. But CRT monitors have an advantage in darkness.
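The reversal between darkness and daylight can be reproduced with a toy model in which the light reflected by the screen is simply added to both white and black. All the figures here are hypothetical and picked only to show the trend: an LCD with a 1000:1 specified contrast that reflects very little, and a CRT with a much higher specified contrast that reflects ten times more.

```python
def effective_contrast(white, black, reflected):
    """Contrast seen by the user when reflected ambient light is added."""
    return (white + reflected) / (black + reflected)

# Hypothetical luminances in cd/m^2: (white, black, reflected in daylight).
lcd = (250.0, 0.25, 1.0)
crt = (100.0, 0.01, 10.0)

for name, (white, black, daylight_reflection) in (("LCD", lcd), ("CRT", crt)):
    in_darkness = effective_contrast(white, black, 0.0)
    in_daylight = effective_contrast(white, black, daylight_reflection)
    print(name, round(in_darkness), round(in_daylight, 1))
```

In darkness the CRT wins by a wide margin, but once the reflected light is added the LCD keeps a far higher effective contrast, which matches what the photo of the two turned-off monitors suggests.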

By the way, this partially explains why monitors with a glossy coating of the screen look so good, at least in the shop window. The ordinary matte coating disperses the light it receives in all directions while the glossy coating reflects it in one direction, like a mirror. So if the source of light isn’t behind your back, the matrix with a glossy coating will seem to have more contrast than a matte matrix. But if the light source is behind you, it’s all different: the matte screen is still dispersing the light more or less uniformly, while the glossy coating is reflecting it right into your eyes.

What I am saying here applies not only to CRT and LCD monitors, but to all other display technologies. For example, the forthcoming SED panels from Toshiba and Canon have a fantastic specified contrast ratio of 100000:1 (in other words, their black color is absolutely black in darkness), but are going to fade in daylight just as the CRT does. SED panels use the same phosphors, which shine when bombarded with an electron beam, and there is a layer of black tinted film in front of them. It is impossible to decrease the transparency of the film (to increase the contrast ratio) with CRTs because the beam becomes unfocused, and it is going to be impossible with SED panels because the service life of the emitting cathodes is reduced at higher currents.

LCD monitors with a high specified contrast, up to 3000:1, have appeared recently, although they use the same matrixes as the monitors with more traditional numbers in the specs. It’s because the so-called dynamic contrast ratio rather than the ordinary contrast ratio is specified.

The concept is in fact simple. Each movie has both bright and dark scenes. In both cases our eyes perceive the brightness of the whole picture at once. So if most of the screen is bright, the level of black in the small dark areas doesn’t matter, and vice versa. It is reasonable then to automatically adjust the backlight brightness depending on the onscreen image. The backlighting can be made less intensive in dark scenes, to make them even darker, and more intensive, up to the maximum, in bright scenes, to make them even brighter. This automatic adjustment is referred to as dynamic contrast ratio.

The official numbers for dynamic contrast are arrived at in the following manner: the level of white is measured at the maximum of backlight brightness and the level of black is measured at its minimum. So if the matrix has a specified contrast ratio of 1000:1 and the monitor’s electronics can automatically vary the backlight brightness over a threefold range, the resulting dynamic contrast is 3000:1.
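The arithmetic behind such a number can be written out in a couple of lines. This is a minimal sketch; the function and parameter names are mine, and the backlight levels are given in arbitrary relative units.

```python
def dynamic_contrast(static_contrast, backlight_max, backlight_min):
    """White is measured at maximum backlight, black at minimum backlight."""
    white = static_contrast * backlight_max   # relative white level
    black = 1.0 * backlight_min               # relative black level
    return white / black

# A 1000:1 matrix whose backlight can be varied over a threefold range.
print(dynamic_contrast(1000, backlight_max=3.0, backlight_min=1.0))
```

Note that the two levels are never on the screen at the same time, which is why this figure says nothing about the contrast within a single frame.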

It should be made clear that dynamic contrast mode is suitable only for movies and, perhaps, for games, but gamers often prefer to increase brightness in dark scenes for an easier orientation in the surroundings rather than to lower it. For ordinary work this mode is not just useless, but often irritating.

Of course, the screen contrast – the ratio of white to black – is never higher than the monitor’s static specified contrast ratio at any given moment, but the level of black is not important for the eye in bright scenes and vice versa. That’s why the automatic brightness adjustment in movies is indeed helpful and creates an impression of a monitor with a greatly enhanced dynamic range.

The downside is that the brightness of the whole screen is changed at once. In scenes that contain both light and dark objects in equal measure, the monitor will just select some average brightness. Dynamic contrast doesn’t work well on dark scenes with a few small, but very bright objects (like a night street with lamp-posts) – the background is dark, and the monitor will lower brightness to a minimum, dimming the bright objects as a consequence. But as I said above, these drawbacks are negligible due to the peculiarities of our perception and are anyway less annoying than the insufficient contrast of ordinary monitors. I guess most users are going to like the new technology.
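The night street case is easy to reproduce with a toy model of global backlight control. The policy below (backlight proportional to the average picture level, with a floor at one third of the maximum) is purely my assumption for the sake of illustration; real monitors use their own undisclosed algorithms.

```python
def choose_backlight(frame, minimum=1 / 3, maximum=1.0):
    """Pick one backlight level for the whole screen from the mean pixel level."""
    mean = sum(frame) / len(frame)
    return minimum + (maximum - minimum) * mean

def displayed(frame, backlight):
    """Every pixel is scaled by the same backlight level."""
    return [pixel * backlight for pixel in frame]

# Pixel levels from 0.0 (black) to 1.0 (white), 100 pixels per "frame".
bright_scene = [0.9] * 100
night_street = [0.02] * 98 + [1.0] * 2   # dark background, two lamp-posts

for name, frame in (("bright scene", bright_scene), ("night street", night_street)):
    backlight = choose_backlight(frame)
    print(name, round(backlight, 2), round(max(displayed(frame, backlight)), 2))
```

In the bright scene the backlight stays near its maximum, while in the night street it falls almost to the floor and the two lamp-posts are shown at little more than a third of the brightness they could have had.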

Color Reproduction: Color Gamut and LED Backlighting

About two years ago I wrote in my article called Parameters of Modern LCD Monitors that the color gamut parameter was actually insignificant for monitors just because all monitors had the same gamut. Fortunately, things have changed for the better in this area since. Monitors with an enhanced gamut have emerged in shops.

So, what is the color gamut?

As a matter of fact, the human eye sees light in a wavelength range of 380-700nm, from violet to red. There are four kinds of light-sensitive detectors in the eye: one type of rods and three types of cones. The rods have excellent sensitivity but do not distinguish between wavelengths. They perceive the whole range at once and endow us with black-and-white vision. The cones have worse sensitivity (and do not work in twilight), but give us color vision under proper lighting. Each type of cone is sensitive to a certain wavelength range. If our eye receives a ray of monochromatic light with a wavelength of 400nm, only one type of cone will react: the one that is responsible for blue. The other two types, red and green, won’t notice anything. Thus, the different types of cones play the same role as the RGB filters standing in front of a digital camera’s sensor.

It may seem that our color vision can be easily described with three numbers, each for a signal from a certain type of cones, but that’s not so. Experiments back at the beginning of the last century showed that our eyes and brain process information in a less straightforward manner, and if color perception is described with three coordinates (red, green and blue), it turns out that the eye can easily perceive colors which have a negative red value in this system! Thus, it is impossible to fully describe the human vision with the RGB model.

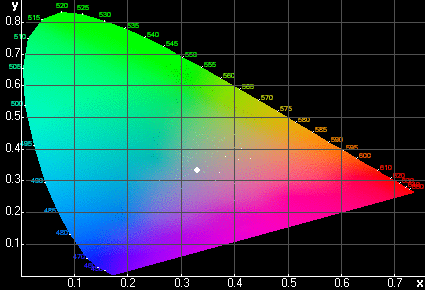

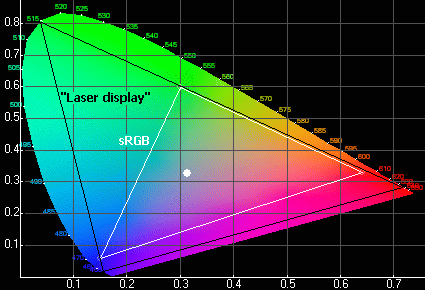

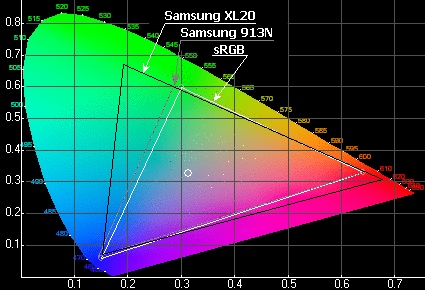

The experiments eventually led to the creation of a system that encompasses the whole range of colors our eyes can perceive. Its graphical representation is called a CIE diagram and it is shown in the picture above. All the colors perceived by the eye are within the colored area. The borderline of this area is made up of pure, monochromatic colors. The interior corresponds to non-monochromatic colors, up to white, which is marked with a white dot. As a matter of fact, white color is a subjective notion for the eye as we can perceive different colors as white depending on the conditions. The white dot in the CIE diagram is the so-called flat spectrum point with coordinates of x=y=1/3. Under ordinary conditions, this color looks very cold, bluish.

Any color perceived by the eye can be identified with two coordinates (x and y) on the CIE diagram. But what’s the most exciting thing is that we can create any color by mixing up monochromatic colors in some proportion because our eye is totally indifferent to what spectrum the light has. It’s only important how each type of the detectors, rods and cones, has been excited by that light.

If the human vision were successfully described with the RGB model, it would suffice to take three sources (red, green and blue) and mix them in necessary proportions to emulate any color the eye could see. But as I said above, we can see more colors than the RGB model can describe, so there is another question: having three sources of different colors, what other colors can we make by mixing the sources?

The answer is simple: if you mark points with the coordinates of the basic colors in the CIE diagram, everything you can get by mixing them up is within the triangle you can draw by connecting the points. This triangle is referred to as a color gamut.
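Checking whether a given color falls within a gamut is then a plain point-in-triangle test on the (x, y) coordinates. The sketch below uses the sRGB primaries as the triangle; the function names are mine, and the coordinates of the test green (roughly a monochromatic 520nm light) are approximate values quoted from memory of the CIE 1931 tables.

```python
# CIE 1931 xy coordinates of the sRGB primaries: red, green, blue.
SRGB = ((0.64, 0.33), (0.30, 0.60), (0.15, 0.06))

def _cross(o, a, b):
    """Z component of the cross product of vectors o->a and o->b."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def in_gamut(point, primaries):
    """True if the xy point lies inside or on the gamut triangle."""
    r, g, b = primaries
    d1 = _cross(r, g, point)
    d2 = _cross(g, b, point)
    d3 = _cross(b, r, point)
    has_negative = d1 < 0 or d2 < 0 or d3 < 0
    has_positive = d1 > 0 or d2 > 0 or d3 > 0
    # Inside means the point is on the same side of all three edges.
    return not (has_negative and has_positive)

flat_spectrum_white = (1 / 3, 1 / 3)
pure_green_520nm = (0.0743, 0.8338)   # approximate spectral locus point

print(in_gamut(flat_spectrum_white, SRGB))
print(in_gamut(pure_green_520nm, SRGB))
```

The white point is inside the triangle, while the pure green is far outside it, so no mixture of these three primaries can reproduce it.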

It is the so-called laser display that can produce the biggest color gamut for a system with three basic colors. In such a display, the basic colors are formed by three lasers (red, green and blue). A laser has a very narrow radiation spectrum, extremely monochromatic, so the coordinates of the corresponding basic colors lie exactly on the diagram’s border. It’s impossible to move them out beyond this border because points out there do not correspond to any light. And if the coordinates are shifted into the diagram, the area of the gamut triangle is reduced.

The picture shows that even a laser display cannot reproduce all the colors the human eye can see, although it is quite close to doing that. To enhance the gamut, more basic colors can be used (4, 5 or more) or a hypothetical system can be created that would be able to change the coordinates of the basic colors “on the fly”. But the former solution is too technically complex today whereas the latter is implausible.

Well, it’s too early yet for us to grieve about the drawbacks of laser displays. We don’t use them as yet, and today’s monitors have a far inferior gamut. In other words, in actual monitors, both CRT and LCD (except for some models I’ll discuss below), the spectrum of each of the basic colors is far from monochromatic. In the terms of the CIE diagram it means that the vertexes of the triangle are shifted from the border of the diagram towards its center, and the resulting area of the triangle is greatly reduced.

There are two triangles in the picture above, one for a laser display and another for the so-called sRGB color space. In short, the second triangle corresponds to the typical gamut of today’s LCD and CRT monitors. Not an encouraging picture, is it? I fear we won’t see a pure green color in the near future.

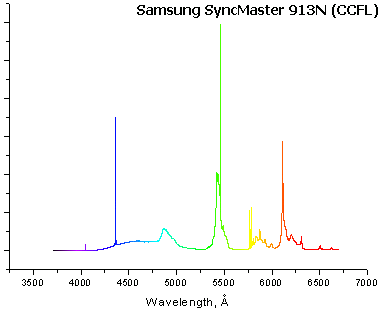

Talking about LCD monitors, the reason for this is the poor spectrum of backlight lamps in LCD panels. The cold-cathode fluorescent lamps employed in them emit radiation in the ultraviolet range, which is converted into white light by the phosphors on the lamp’s walls.

In nature, it is various hot bodies that are the usual source of light for us. First of all, it is the Sun. The radiation spectrum of such a body is described by Planck’s law and the main thing is that it is continuous, with all wavelengths, and the radiation intensity at adjacent wavelengths differs but slightly.

A fluorescent lamp, just like any other gas-discharge light source, produces a line spectrum with no radiation at some wavelengths and with a manifold difference in intensity between parts of the spectrum that are only a few nanometers apart. Our eye is insensitive to the type of the spectrum, so it perceives the Sun and the fluorescent lamp as radiating identical light. But it’s different in the PC monitor.

So, there are several fluorescent lamps standing behind the LCD matrix. They are shining through the matrix. On the other side, there is a grid of color filters – red, green and blue – that make up triads of sub-pixels. Each filter cuts a portion of spectrum, corresponding to its pass-band, out of the lamp’s light. This portion must be as narrow as possible to achieve the largest color gamut. Suppose there is a peak of 100 imaginary units at a wavelength of 620nm in the backlight spectrum. We use a red sub-pixel filter with a maximum of transmission at 620nm and seem to get the first vertex of the color gamut triangle, right on the border of the diagram. But it’s not that simple in reality.

The phosphors of modern fluorescent lamps are a tricky thing. We can’t control their spectrum at will. We can only take some phosphor that suits our needs out of the set of phosphors chemistry knows. The best one we can take has a 100-unit peak at a wavelength of 575nm (it’s yellow). And the 620nm red filter has a transmission factor of 1/10 of the maximum at 575nm.

What does this mean, ultimately? It means we’ve got two wavelengths instead of one at the filter’s output: 620nm with an intensity of 100 units and 575nm with an intensity of 100*1/10 = 10 units (the intensity in the lamp’s spectrum is multiplied by the filter’s transmission factor at this wavelength). That’s not a negligible value.

As a result, the “extra” peak in the lamp spectrum that passes partially through the filter yields a polychromatic color (red with a tincture of yellow) instead of a monochromatic red. It means that in the CIE diagram the corresponding vertex of the color gamut triangle is shifted from the bottom border upwards, closer to yellow hues, thus reducing the total area of the triangle.
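The same arithmetic in code form: the light behind the filter is the lamp spectrum multiplied by the filter’s transmission at each wavelength. The two-line spectrum and the transmission factors are the imaginary figures from the example above (with the transmission at 620nm taken as 1.0), and the function name is my own.

```python
# Imaginary lamp spectrum: wavelength in nm -> intensity in arbitrary units.
lamp_spectrum = {620: 100.0, 575: 100.0}

# Red filter transmission relative to its maximum at 620 nm.
red_filter_transmission = {620: 1.0, 575: 0.1}

def filter_output(spectrum, transmission):
    """Multiply each spectral line by the filter transmission at that wavelength."""
    return {
        wavelength: intensity * transmission.get(wavelength, 0.0)
        for wavelength, intensity in spectrum.items()
    }

red_subpixel = filter_output(lamp_spectrum, red_filter_transmission)
leak_share = red_subpixel[575] / sum(red_subpixel.values())
print(red_subpixel, round(leak_share, 3))
```

Roughly one eleventh of the light coming out of the red sub-pixel is yellow, and that is enough to pull the vertex of the triangle away from the border of the diagram.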

Seeing is believing, so I’d like to illustrate my point. I asked for help from the plasma physics department of Skobeltsyn Nuclear Physics Research Institute and they offered me an opportunity to use their automatic spectrographic system. The system was designed to research and control the growth of artificial diamond films in SHF plasma by means of the plasma’s emission spectra, so it should deal with an ordinary LCD monitor with ease.

So I turned the system on (the big angular box is a monochromator Solar TII MS3504i; its input port is on the left and a light guide with an optical system is fastened next to that input; the orange cylinder of the photo-sensor can be seen on the right – it is fastened on the monochromator’s output port; the system’s power supply is at the top)…

I adjusted the height of the input optical system and connected the other end of the light guide to it:

And finally I put it in front of the monitor. The system is PC-controlled, so the whole process of taking the spectrum in the range I was interested in (from 380 to 700nm) was accomplished in a couple of minutes.

The X-axis shows wavelengths in angstroms (10 Å = 1 nm), the Y-axis shows intensity in arbitrary units. For better readability, the diagram is painted according to how our eye perceives the different wavelengths.

This test was performed on a Samsung SyncMaster 913N monitor, a rather old low-end model on a TN matrix, but that doesn’t matter. The same lamps with the same spectrum are employed in a majority of modern LCD monitors.

What does the spectrum show? It illustrates what I’ve said above: besides the three peaks corresponding to blue, red and green sub-pixels, there is some junk in the area of 570-600nm and 480-500nm. It is these unnecessary peaks that shift the vertexes of the color gamut triangle towards the center of the CIE diagram.

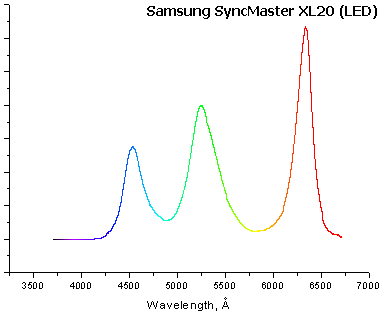

Surely, the best way to solve the problem is to abandon cold-cathode fluorescent lamps altogether. Some manufacturers have done that, like Samsung did with its SyncMaster XL20. Instead of fluorescent lamps this monitor uses a block of LEDs of three colors as backlight (the LEDs are red, blue and green because the use of white LEDs doesn’t make sense – red, green and blue colors would anyway have to be cut out of the backlight spectrum with filters). Each LED has a neat, flat spectrum that precisely coincides with the pass-band of the appropriate filter and has no extra peaks.

That’s a treat for the eye, isn’t it?

The spectrum of each LED is rather wide, so their radiation can’t be called strictly monochromatic and they can’t match a laser display. Yet they are much better than the spectrum of CCF lamps. Note the neat smooth minimums at those two places where the CCF lamps have unnecessary peaks. Note also that the maximums of all the three peaks have changed. The maximum of red is now much closer to the borderline of the visible spectrum – this should increase the color gamut.

And here is the color gamut itself. The gamut triangle of the SyncMaster 913N isn’t much different from the humble sRGB and green suffers the most in comparison with the gamut of the human eye. But the gamut of the XL20 cannot be mistaken for sRGB – it covers much more of green and blue-green hues and includes a deep red. That’s not a laser display, yet it’s impressive anyway.

Well, we won’t have home monitors with LED-based backlighting for some time yet. The 20” SyncMaster XL20 is expected to arrive this spring at a price of $2000 while the 21” NEC SpectraView Reference 21 LED costs three times that money! Only printing professionals, at whom these models are mainly targeted, are accustomed to such prices; home users are not.

However, there is a hope for us, too. There have arrived monitors with fluorescent backlight lamps that have new phosphors which don’t have so many unnecessary peaks in the spectrum. Such lamps aren’t as good as LEDs, but are considerably better than the old lamps. The gamut they provide is somewhere in between the gamut of models on the old lamps and with LED-based backlighting.

To compare color gamuts in numbers, it is common practice to state a monitor’s gamut as a percentage of some standard color gamut. The sRGB color space is too small, so NTSC is often used instead. Thus, ordinary sRGB monitors have a gamut of 72% NTSC. The monitors with improved backlight lamps have a gamut of 97% NTSC, and monitors with LED-based backlighting reach 114% NTSC!
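One common way to arrive at such a percentage is to compare the areas of the two gamut triangles on the CIE xy diagram. The sketch below does that for sRGB against the NTSC (1953) primaries. It is my own illustration and not necessarily the exact method any given manufacturer uses: the result depends on the diagram chosen (xy or u'v'), and an area ratio is not the same thing as actual coverage.

```python
# CIE 1931 xy coordinates of the primaries: red, green, blue.
SRGB = ((0.64, 0.33), (0.30, 0.60), (0.15, 0.06))
NTSC_1953 = ((0.67, 0.33), (0.21, 0.71), (0.14, 0.08))

def triangle_area(primaries):
    """Area of the gamut triangle (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = primaries
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2

def percent_of(gamut, reference):
    """Gamut area as a percentage of the reference gamut area."""
    return 100 * triangle_area(gamut) / triangle_area(reference)

print(round(percent_of(SRGB, NTSC_1953), 1))
```

On the xy diagram this comes out at roughly 71%, close to the figure usually quoted for ordinary monitors. A number above 100% simply means that the triangle is larger than the NTSC one, not that every NTSC color is covered.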

What does the enhanced color gamut give us? The manufacturers of monitors with LED-based backlighting usually post photographs of their new models next to older ones in press releases and simply increase the color saturation of the new models. That’s not correct since only the saturation of those colors that don’t fit within the old monitors’ gamut is improved. But viewing such press releases on your old monitor, you won’t ever notice the difference because your monitor cannot reproduce such colors anyway. It’s like trying to watch a report from a color TV exhibition on a black-and-white TV-set. On the other hand, the manufacturers have to show the advantages of the new models one way or another in their press releases.

There is a difference in practice, however. I wouldn’t say a great difference, yet the enhanced-gamut models are certainly better. They produce very pure and deep red and green colors. If you work for a while at a monitor with LED backlighting and then return to a monitor with CCF lamps, you involuntarily try to add saturation until you realize that it won’t help. Red and green will anyway remain somewhat dull and muddy in comparison with the LED monitor.

Unfortunately, the models with the improved backlight lamps have not been spreading in the way they should have. For example, Samsung first employed such lamps in its TN-based SyncMaster 931C. Low-end monitors on TN matrixes wouldn’t get worse for having an enhanced color gamut, but I guess few people buy such models for work with color due to their obviously poor viewing angles. Well, all the major manufacturers of LCD panels – LG.Philips LCD, AU Optronics and Samsung – have already developed S-IPS, MVA and S-PVA panels with diagonals of 26-27” and with the new backlight lamps.

Lamps with the new phosphors will eventually oust the older ones and we’ll move beyond the humble sRGB gamut for the first time in the history of color PC monitors.

Color Reproduction: Color Temperature

As I mentioned in the previous section, the term white color is subjective and depends on external conditions. Now I’m going to dwell upon this topic for longer.

So, there is actually no white color that could be regarded as a reference standard. We could take a flat spectrum (with equal intensity at each wavelength in the optical range) as such, but there’s one problem. This color would look cold and bluish to the human eye in most cases. It wouldn’t be white.

As a matter of fact, our brain regulates the white balance, just like you do with your camera, depending on ambient lighting. An incandescent lamp looks just a little yellowish in the evening at home, although the same lamp will look totally yellow if you turn it on in a slight shadow on a sunny day. It’s because our brain adjusts its white balance for predominant lighting, which is different in these two cases.

The desired white color is commonly described with the term color temperature. It is the temperature an ideal blackbody must have to radiate light of the corresponding color. For example, the surface of the Sun has a temperature of about 6000K, and the color temperature of daylight on a sunny day is indeed defined to be 6000K. The filament of an incandescent lamp has a temperature of about 2700K and the color temperature of its light is 2700K, too. Funnily enough, the higher an object’s temperature is, the colder its light seems to us because blue tones become predominant.
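The shift towards blue follows directly from Planck’s law for blackbody radiation. The sketch below compares the spectral radiance at a blue wavelength (450nm) and a red one (650nm) for the two temperatures mentioned above; the choice of these two wavelengths and the function names are mine.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
K = 1.380649e-23     # Boltzmann constant, J/K

def planck(wavelength_m, temperature_k):
    """Spectral radiance of an ideal blackbody at the given wavelength."""
    prefactor = 2 * H * C**2 / wavelength_m**5
    exponent = H * C / (wavelength_m * K * temperature_k)
    return prefactor / math.expm1(exponent)

def blue_to_red(temperature_k):
    """Ratio of radiance at 450 nm to radiance at 650 nm."""
    return planck(450e-9, temperature_k) / planck(650e-9, temperature_k)

for temperature in (2700, 6000):
    print(temperature, round(blue_to_red(temperature), 2))
```

At 2700K the blue end carries only a small fraction of the red radiance, which is why the lamp looks yellowish, whereas at 6000K blue is slightly ahead of red.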

The notion of color temperature becomes somewhat irrelevant for line-spectrum sources like the above-mentioned CCF lamps, because their radiation cannot be compared with the continuous spectrum of an ideal blackbody. So when it comes to such light sources, we have to consider how their spectrum is perceived by our eye, and the instruments for measuring the color temperature must perceive the color just like the eye does.

You can set up the color temperature of your monitor from its menu. You’ll find some three or four preset values in there (many more in certain models) along with the option of adjusting the levels of the basic RGB colors. The latter option is less convenient than on CRT monitors, where it was the temperature rather than the RGB levels that you could adjust, but it is a kind of standard option for LCD monitors, except for some expensive models. The goal of color temperature setup on a monitor is obvious. It is the ambient lighting that serves as the sample for setting up the white balance, so the monitor must be set up in such a way that its white color looks white, without a bluish or reddish tone.

The most disappointing thing is that the color temperature on many monitors varies greatly between different levels of gray. It’s clear that gray differs from white in brightness only, so we could just as well talk about a gray balance rather than a white balance, and that would be even more correct. And the balance for different levels of gray proves to be different on many monitors.

Above you can see a photograph of the screen of an ASUS PG191 monitor with four gray squares of different brightness. To be exact, there are three versions of the photograph put together. In the first of them, the gray balance is based on the rightmost (fourth) square, in the second – on the third square, in the last one – on the second square. It’s wrong to say that one version is correct and the others are not. They are all incorrect because the monitor’s color temperature shouldn’t depend on what level of gray you measure it by. This is not so in this case. This situation can only be corrected with a hardware calibrator – not with the monitor’s settings.

That’s why I publish a table with measured color temperatures of four different levels of gray in my monitor reviews. If the temperatures differ by much, the onscreen image will have a tincture of different colors like in the picture above.
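For readers who want to build such a table from their own colorimeter readings, here is a rough sketch. It uses McCamy’s well-known approximation for correlated color temperature from CIE xy coordinates, which is my choice for the illustration and not necessarily what any particular calibrator does. The four gray readings are made-up values, not measurements of any real monitor.

```python
def mccamy_cct(x, y):
    """Approximate correlated color temperature (K) from CIE 1931 xy (McCamy)."""
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33

# Hypothetical xy readings for four levels of gray.
gray_readings = {
    "25% gray": (0.3000, 0.3150),
    "50% gray": (0.3080, 0.3240),
    "75% gray": (0.3127, 0.3290),   # the D65 white point, as a sanity check
    "white": (0.3200, 0.3350),
}

temperatures = {name: mccamy_cct(x, y) for name, (x, y) in gray_readings.items()}
for name, cct in temperatures.items():
    print(name, round(cct))

spread = max(temperatures.values()) - min(temperatures.values())
print("spread, K:", round(spread))
```

The larger the spread between the rows, the more visible the color tint that shifts from dark to light tones.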

Workplace Ergonomics and Monitor Setup

Although this topic isn’t directly related to monitors’ parameters, I want to end this review with it since practice suggests that many people, especially those that have got used to CRT monitors, find it problematic to set up their LCD monitor.

First, the positioning in space. The monitor should be placed at arm’s length, or even farther if the monitor has a large screen diagonal. You shouldn’t put the monitor too close to you, so if you are considering a model with a small pixel size (17” monitors with a resolution of 1280×1024, 20” models with a resolution of 1600×1200 or 1680×1050, and 23” models with a resolution of 1920×1200), make sure the image isn’t too small and illegible for you. If you’ve got any apprehensions, consider monitors with the same resolution but a larger diagonal, because otherwise there’s only one solution left: selecting larger fonts and interface elements in Windows (or the OS you are using). This doesn’t look pretty in some programs.
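The pixel size of any model can be estimated from its diagonal and resolution before the purchase. This is a small sketch assuming square pixels and a diagonal quoted for the visible area of the screen; the function name is mine.

```python
import math

def pixel_pitch_mm(diagonal_inches, width_px, height_px):
    """Approximate pixel pitch in millimeters, assuming square pixels."""
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_inches * 25.4 / diagonal_px

models = (
    (17, 1280, 1024),
    (20, 1600, 1200),
    (20, 1680, 1050),
    (23, 1920, 1200),
)

for diagonal, width, height in models:
    pitch = pixel_pitch_mm(diagonal, width, height)
    print(f'{diagonal}" {width}x{height}: {pitch:.3f} mm')
```

All four of the models listed above land at roughly a quarter of a millimeter per pixel. Running the same calculation for a larger diagonal at the same resolution shows how much bigger the text would get.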

The monitor’s height should be such that the top edge of the screen is at your eye level. In this case your gaze is directed downwards at work and the eyes are half covered by the lids, which prevents them from drying (it is a fact that we blink too rarely while working). Many low-end models, even with diagonals of 20” and 22”, have stands without height adjustment. If you can choose, try to avoid such models. In monitors that offer screen height adjustment, check out the range of this adjustment. Almost all modern monitors allow you to replace their native stand with a standard VESA mount. You may want to use this option because such a mount allows you to place the screen anywhere and also set its height just as you like.

An important thing is the lighting of the workplace. It is inadvisable to work in full darkness: the abrupt transition between a bright screen and a dark background strains the eyes. Some background lighting, such as a desk or wall lamp, should be enough for watching movies and playing games, but for work the workplace should be lit normally. You can use incandescent lamps or fluorescent lamps with an electronic ballast (compact lamps for an E14 or E27 socket, or an ordinary “tube”), but you should avoid fluorescent lamps with an electromagnetic ballast. Such lamps flicker at twice the mains frequency (i.e. at 100Hz if you’ve got 50Hz in the mains), and this flicker may interfere with the monitor’s refresh rate or with the flicker of its backlight lamps, which results in very unpleasant effects. Large offices use banks of fluorescent lamps that flicker in different phases (this is achieved by connecting the lamps to different phases of the mains supply or by using phase-shifting circuits), which greatly reduces the overall flicker. At home there is usually only one such lamp, so the only way to avoid the flicker is to use a modern lamp with an electronic ballast.
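The arithmetic behind the flicker can be sketched in a few lines. The doubling of the mains frequency comes from the text; the “beat” estimate is a crude assumption of mine (the gap between the lamp flicker and the nearest whole multiple of the refresh rate), not a model of what the eye actually perceives. Both function names are hypothetical.

```python
def lamp_flicker_hz(mains_hz):
    """A lamp on a magnetic ballast peaks on both half-cycles of the mains."""
    return 2 * mains_hz

def beat_hz(flicker_hz, refresh_hz):
    """Gap between the flicker and the nearest whole multiple of the refresh rate.

    This is a simplification assumed for illustration, not a model of perception.
    """
    multiple = max(1, round(flicker_hz / refresh_hz))
    return abs(flicker_hz - multiple * refresh_hz)

for mains in (50, 60):
    flicker = lamp_flicker_hz(mains)
    for refresh in (60, 75, 85):
        print(f"mains {mains}Hz -> lamp {flicker}Hz, refresh {refresh}Hz: "
              f"beat about {beat_hz(flicker, refresh)}Hz")
```

Under this simplification, a low non-zero result suggests a slow, potentially visible pulsation, while an electronic ballast sidesteps the problem altogether.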

Having placed your monitor in physical space, you should now configure it.

As opposed to a CRT, an LCD monitor has only one resolution in which it works well. In other resolutions the image quality is poor, so you should select the native resolution in the graphics card’s settings right away. That’s why you should make sure before the purchase that the native resolution of the monitor is not too high or too low for you. Otherwise, you may want to choose a model with a different screen diagonal or resolution.

Today’s LCD monitors have in fact only one refresh rate, 60Hz. Although 75Hz or even 85Hz may be declared for many models, the monitor’s matrix still works at 60Hz if you select those settings: the monitor’s electronics simply discards the “extra” frames. In any case, there is no sense in selecting higher frequencies because LCD monitors, unlike CRT ones, do not flicker.
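To get a feel for what discarding the “extra” frames means, here is a small sketch. It assumes the simplest possible policy, namely that the matrix shows the newest incoming frame at each of its own refreshes; how a particular monitor’s electronics actually picks frames is not specified here, and `dropped_frames` is a hypothetical helper.

```python
def dropped_frames(source_hz, panel_hz):
    """Indices of source frames never shown during one second.

    Assumes the panel shows the newest source frame at each of its refreshes.
    """
    shown = {(tick * source_hz) // panel_hz for tick in range(panel_hz)}
    return [frame for frame in range(source_hz) if frame not in shown]

for source in (60, 75, 85):
    dropped = dropped_frames(source, 60)
    print(f"{source}Hz input on a 60Hz matrix: {len(dropped)} frames dropped per second, "
          f"first few: {dropped[:3]}")
```

Under this assumption a 75Hz signal loses every fifth frame, 15 per second in total, so the higher setting adds nothing to what you actually see.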

If your monitor offers two inputs, a digital DVI-D and an analog D-Sub, you should prefer the former. It provides a higher-quality picture at high resolutions and simplifies the setup procedure. If your monitor only has an analog interface, turn the monitor on, select the native resolution, and then open some sharp, high-contrast image on it, for example a page of text. Make sure there are no artifacts like flickering, waves, noise, shadows around characters, etc. If you see anything like that, press the auto-adjustment button. On many models this feature runs automatically when you change the resolution, but the smooth low-contrast picture of the Windows Desktop may not be enough for a successful adjustment, so you have to start the procedure manually once again. There are no such problems with DVI-D, which is why this connection is preferable.

Almost all modern monitors are too bright at their default settings, at about 200 candelas per sq. m. This brightness is suitable for work in bright daylight or for watching movies, but is too high for work in an office room. For comparison, the typical brightness of a CRT monitor is 80-100 candelas per sq. m. So, the first thing you should do after you purchase your new monitor is to select an acceptable brightness. Don’t rush and try to do everything at once. And don’t try to make everything look “exactly as on the old monitor”, because your old monitor was pleasing to your eyes because they had got used to it, not because it was perfectly set up or provided a high image quality. A person who has moved to a new LCD monitor from an old CRT that yielded a dull image is going to complain about excessive brightness and sharpness. But if you put his old CRT before him a month later, he won’t be able to bear it because its image is too dull and dark.

So, if your eyes feel any discomfort, try to adjust the monitor’s settings smoothly and in relation to each other. Lower the brightness and contrast settings a little and try to work. If you still feel discomfort, lower them a little more. Give your eyes some time to get used to the picture after each change of the settings.

There is one good trick for quickly selecting an acceptable level of brightness on an LCD monitor. Just put a sheet of white paper next to the monitor and adjust the monitor’s brightness and contrast settings so that white on the screen looks as bright as the paper. This trick only works if your workplace is properly lit.

You should also experiment with the color temperature. Ideally, it should be set up so that white on the monitor’s screen really looks white rather than bluish or reddish. But this perception depends on the type of ambient lighting, whereas monitors are initially set up for some averaged conditions (and many models are even set up inaccurately). Try choosing a warmer or colder color temperature and moving the RGB level sliders in the monitor’s menu. This may produce a positive effect, especially if the monitor’s default color temperature is too high. The eyes react worse to cold colors than to warm ones.

Unfortunately, many users don’t follow even these simple recommendations. This leads to multi-page forum threads like “Help me find a monitor that wouldn’t strain my eyes”. People even compile long lists of monitors that don’t strain the eyes. I personally have worked with dozens of monitors and my eyes have never been hurt by any of them, except for a couple of super-inexpensive models which had problems with image sharpness or a very poor color reproduction setup. Your eyes are hurt by incorrectly selected settings, not by the monitor as such!

But Web discussions may become totally absurd. People discuss the influence of the flicker of backlight lamps (it’s usually 200-250Hz in today’s monitors – the eye can’t perceive that), the influence of polarized light, the influence of the too-low or too-high (tastes differ!) contrast of today’s LCD monitors. I even came across one thread somewhere that was concerned with the influence of the line spectrum of the backlight lamps on the eyes. But that’s rather a topic for another article, one I will perhaps write for the next April Fools’ Day. 🙂